The Cost of Compute: A $7 Trillion Race to Scale Data Centers for AI

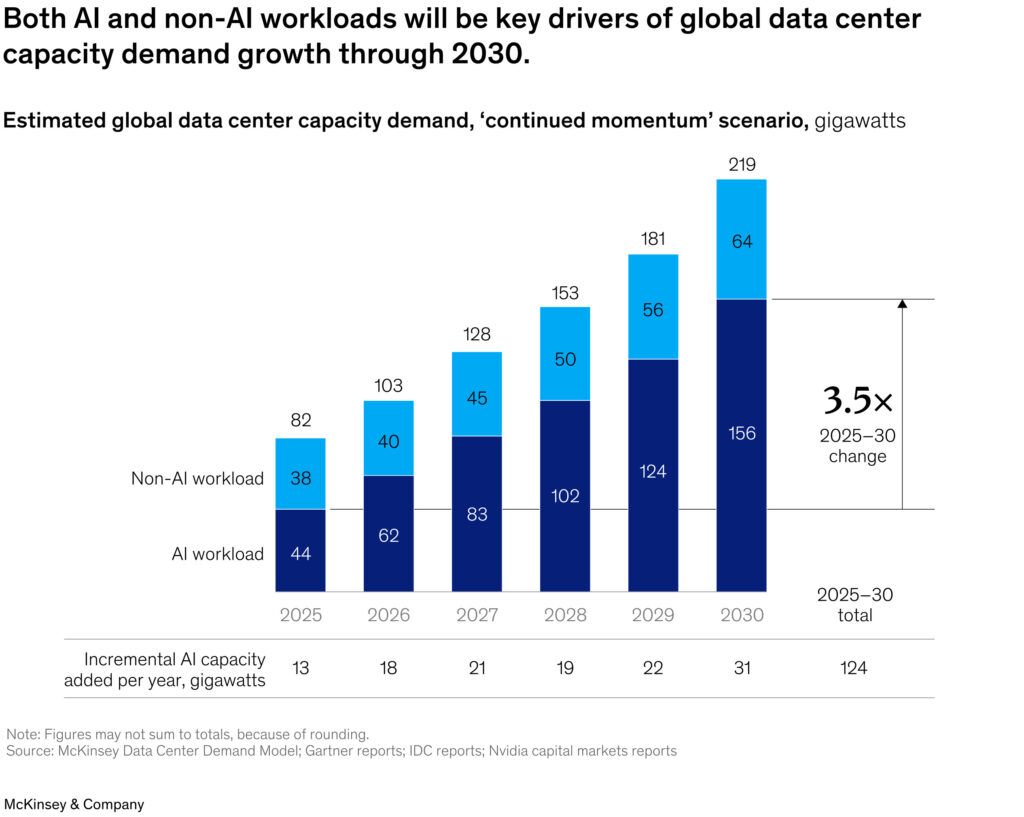

The rise of artificial intelligence isn’t just rewriting the rules of innovation—it’s redrawing the entire map of global infrastructure. From powering massive AI models to supporting everyday enterprise software, the demand for compute power has surged at an unprecedented pace. And that demand comes with a hefty price tag: $6.7 trillion in capital investment by 2030.

Welcome to the race to scale data centers—the new digital battleground of the 21st century.

What Is Compute Power, and Why Does It Matter?

At its core, compute power refers to the capacity of hardware and infrastructure to process data and run complex software systems. This includes processors, graphics cards, memory, storage, networking equipment, and the energy required to power and cool it all.

But in the age of AI, compute power has taken on a new role—it’s no longer just a technical necessity, it’s a strategic asset. From training large language models (LLMs) to deploying AI in healthcare, finance, and logistics, the ability to compute efficiently and at scale is now a key competitive differentiator.

Why the Price Tag Is So High: The Breakdown of $6.7 Trillion

By 2030, the world will need nearly $7 trillion in new data center infrastructure. That investment will be split across two broad categories:

- $5.2 trillion to support AI-optimized data centers

- $1.5 trillion to maintain and upgrade traditional IT workloads

This isn’t just about building more data centers—it’s about building smarter, faster, and more specialized infrastructure that can handle the sheer volume and complexity of AI workloads. Unlike traditional enterprise applications, AI demands high-performance chips (like GPUs), faster networking, more storage, and significant cooling and energy systems.

The Role of Non-AI Workloads in the Compute Equation

While AI captures most of the headlines, traditional (non-AI) workloads still make up a significant portion of data center operations. These include:

- Email and communication systems

- Web hosting and content delivery

- ERP and enterprise software

- File storage and backups

These systems are typically less compute-intensive and more predictable, relying on central processing units (CPUs) rather than expensive GPUs or AI accelerators. They also require less cooling and energy density, making them more cost-effective to operate. However, they still need ongoing investment and modernization to keep pace with performance and security standards.

The Challenge: Investing in an Uncertain Future data centre & AI

Scaling compute power at this magnitude presents a tough challenge: how do you invest billions—or even trillions—without a clear map of what the future will look like?

AI is evolving rapidly. New models, chip architectures, and software frameworks emerge constantly. As a result, infrastructure planning becomes a high-stakes balancing act. Companies face critical questions:

- How much capacity will be needed in 5–10 years?

- Will future AI models be more compute-efficient or even more demanding?

- Should investment focus on centralized hyperscale data centers or decentralized edge computing?

Many companies are responding by building in phases, testing returns on investment (ROI) at each stage. This approach allows for more flexibility, especially as AI use cases mature and new breakthroughs continue to shift the landscape.

Who Will Fund the Future of Compute?

Historically, the heavy lifting has been done by cloud giants like Amazon Web Services, Microsoft Azure, and Google Cloud. But the scale of future infrastructure needs is so massive that new players will likely step in.

We’re already seeing:

- Governments exploring public-private partnerships to accelerate AI adoption

- Private equity and venture capital targeting data center investments

- Enterprises investing directly in compute infrastructure to secure long-term capacity

As costs rise, collaborative financing models may become essential to keep up with global compute demand.

Efficiency vs. Demand: A Tug-of-War

There’s hope that better hardware and software will make compute more efficient. For instance, DeepSeek’s V3 model, launched in 2025, claimed to cut training costs by 18 times and inference costs by 36 times compared to earlier AI models.

But here’s the catch: increased efficiency often leads to increased usage. As compute becomes cheaper and faster, more companies will build and deploy more AI systems—further driving up total demand.

This paradox means that even as we innovate our way to efficiency, overall compute needs may continue to skyrocket.

The Road Ahead: Strategic Decisions, High Stakes

The race to scale data centers isn’t just a technical challenge—it’s a strategic decision with global implications. For leaders across industries, this means:

- Evaluating AI adoption roadmaps and aligning them with infrastructure investments

- Balancing AI and traditional workloads in hybrid data center strategies

- Staying agile amid a rapidly shifting technological and regulatory landscape

In this trillion-dollar race, the winners won’t just be those who invest the most—but those who invest the smartest.

Final Thoughts: Compute Power Is the Backbone of the AI Economy

As we move deeper into the age of artificial intelligence, one thing is clear: compute power is no longer optional—it’s foundational. Whether you’re a cloud provider, enterprise CIO, policymaker, or investor, understanding the dynamics of compute infrastructure will be key to staying competitive.

The cost of compute may be high, but the cost of falling behind is even higher. The $7 trillion race is already underway—and the world is watching.

Tags: AI infrastructure, compute power, data center growth, cloud computing, hyperscalers, capital investment, GPU vs CPU, AI workloads, enterprise IT, data center strategy, future of AI.

Source : The cost of compute power: A $7 trillion race | McKinsey

Read more : https://trendpulsernews.com/googles-ai-overviews-favor-itself-43-of-links-point-back-to-google/

amoxicillin order online – combamoxi.com amoxicillin pill

amoxil for sale online – comba moxi amoxil drug

buy fluconazole pill – https://gpdifluca.com/ buy diflucan 100mg pills

order fluconazole 100mg generic – https://gpdifluca.com/# order diflucan 100mg pills

escitalopram over the counter – https://escitapro.com/# cheap escitalopram

buy escitalopram no prescription – escita pro escitalopram 20mg sale

buy cenforce pill – https://cenforcers.com/# cenforce 100mg price

buy cenforce paypal – https://cenforcers.com/ cenforce ca

where can i buy cialis over the counter – https://ciltadgn.com/ cialis tadalafil 5mg once a day

online cialis prescription – https://ciltadgn.com/# when does cialis patent expire

sanofi cialis otc – cialis headache how long before sex should you take cialis

side effects of cialis daily – https://strongtadafl.com/ cialis liquid for sale

buy ranitidine generic – buy ranitidine generic ranitidine 150mg us

buy viagra cheap online – https://strongvpls.com/# cheap kamagra/viagra

sildenafil 100 mg, – buy viagra professional online no prescription order viagra generic

This is a theme which is forthcoming to my fundamentals… Numberless thanks! Faithfully where can I find the contact details due to the fact that questions? lasix brand

The depth in this ruined is exceptional. buy gabapentin for sale

More delight pieces like this would insinuate the интернет better. https://gnolvade.com/

More posts like this would persuade the online space more useful. on this site

More content pieces like this would create the web better. https://prohnrg.com/product/cytotec-online/

More posts like this would prosper the blogosphere more useful. https://ursxdol.com/propecia-tablets-online/

This website really has all of the tidings and facts I needed adjacent to this subject and didn’t comprehend who to ask. https://ursxdol.com/clomid-for-sale-50-mg/

This website absolutely has all of the tidings and facts I needed to this subject and didn’t identify who to ask. https://prohnrg.com/product/priligy-dapoxetine-pills/

Greetings! Extremely productive suggestion within this article! It’s the crumb changes which will make the largest changes. Thanks a portion for sharing! aranitidine

Anadrole re-creates the effects of Oxymethalone (known as

Anadrol, some of the highly effective anabolic steroids in existence) but without the

side effects. The worth of Anavar can differ relying on where you purchase

it and the quality of the product. In basic, Anavar is probably one of

the more expensive anabolic steroids on the market.

Nevertheless, there are legal options to Anavar that can provide related advantages with out the legal dangers.

Emergency contraception might help reduce the danger of being pregnant, but it will not protect

against sexually transmitted infections (STIs). While Anavar is commonly labeled a “cutting steroid,”

it can be utilized in bulking cycles when paired with high-calorie, high-protein diets.

Anavar’s unique chemical construction makes it proof

against liver metabolism, so it retains efficiency when taken orally.

Furthermore, this prescription steroid cream names’s mild androgenic nature contributes to its popularity.

It permits customers to experience notable features in strength and power without the danger of extreme androgenic side effects such as excessive hair development or aggression. This makes it a suitable possibility for

each female and male athletes trying to enhance

their performance without compromising their total well-being.

Moreover, Anavar is understood to facilitate fats loss by

growing metabolism and promoting a extra environment friendly utilization of saved physique fat as

an vitality supply. This dual action of muscle building and

fats burning is extremely desirable for bodybuilders aiming to achieve a lean and chiseled appearance.

Some are more critical than others, and a few solely

have an effect on certain individuals. It is necessary to do not neglect that everybody

will react differently to each steroid, so it is important to do your research before taking any type of supplement.

There is a approach to avoid most of the side

effects with a tapering dose at the end of the cycle.

This is very good and profitable when it

comes to long cycles, and excessive doses of steroids.

In our experience, the upper the dose of Anavar, the longer this course of can take.

We have discovered that DHT-related side effects are largely decided by genetics.

Thus, some folks might expertise important hair loss from a low dose of Anavar.

While others could experience no hair loss while taking high

doses of trenbolone. The sort of outcomes you’d expect from

a chopping steroid like Anavar is increased muscle hardness,

density and vascularity. If you’re a newbie, we

recommend beginning on the decrease end of the dosage vary (20mg).

If you may be an experienced consumer, you can begin on the greater finish of the dosage

range (50mg).

It’s additionally necessary to notice that Anavar should not be used for

extended intervals of time. Most cycles final between 6-8 weeks, and you must

take a break of at least 4-6 weeks earlier than beginning another cycle.

One Other benefit of Anavar is its capacity to help you retain muscle mass

whereas cutting. This means you could lose fat without sacrificing your hard-earned

gains. When it involves Anavar cycles, there are a couple of things to remember.

Anavar can be utilized alone in an Anavar-only cycle, but it’s extra commonly stacked with different steroids to maximize outcomes.

So before doing a stack with another steroids, first think about what you wish to obtain,

and then use the proper of steroid alongside Anavar for best outcomes.

What if you could get the efficiency enhancing, muscle building results of a

steroid with out taking steroids? It’s also necessary to notice that utilizing Anavar can have

unfavorable effects in your physique, especially if you’re

not using it properly. Anavar may cause hormone imbalances, liver toxicity,

and cardiovascular issues.

Anavar produces great results, notably by means of power and pumps.

Take 3+ grams of fish oil and do your cardio, and ldl cholesterol shouldn’t be a

difficulty, even when you’re delicate to your lipids.

They are additionally not very hepatotoxic, which means

they can be used for longer periods at a time. Men produce testosterone

of their testes, whereas ladies produce testosterone of

their ovaries. In one research, males with HIV acquired 20 mg of

Anavar per day for 12 weeks. This is because of water

filling inside the muscle cell and fluid being expelled outdoors

the muscle cell. Consequently, as extracellular water is not obscuring

superficial veins, they turn into extra seen.

We discover that Anavar customers can drink small

amounts of alcohol and never expertise any crucial hepatic issues.

This is due to Anavar being metabolized by the kidneys,

thus inflicting much less stress to the liver.

Clenbuterol’s side effects will almost definitely diminish post-cycle.

Anavar’s testosterone-suppressing results,

nevertheless, can linger for several months. Clenbuterol burns a

significant quantity of subcutaneous fats, much like Anavar;

thus, combining these two compounds will result

in significant fat loss.

This helps set off rapid mass positive aspects while the injectable steroids take effect.

In this part, we’ll focus on options to Dianabol, similar to non-steroid supplements and comparisons with different anabolic steroids.

This will give you a better understanding of the completely different choices and the way they influence your body.

Online forums and group discussions present numerous accounts of Dianabol experiences from both professional and newbie users.

Superior customers could use as a lot as 50mg per day however ought to monitor for unwanted

effects. Scientists now perceive that the permanent or long-term results of steroids can be attributed to an increase in myonuclei.

Thus, analysis shows that via the precept of muscle memory, the body is ready to recuperate the steroid-induced muscle dimension and restore it in the future (5).

Users can experience notable fat loss in a single cycle of clenbuterol, which usually lasts

from 2 to four weeks. First of all let’s see the

images of Michel each before and after the cycle.

That’s why it’s much better/safer to have an sufficient break in between cycles whereas

using this steroid appropriately. At that second you’ll notice

that you can attack each single set with an depth that you

can by no means maintain prior to now whereas the burden just

retains on rising. After eight weeks of utilizing Dianabol,

you are prone to be anyplace between 25 and 35 lbs heavier.

It’s fairly apparent that being so much heavier, your physique will bear lots

of changes.

Dianabol is a popular oral steroid as a result of its potent effects on mass acquire.

Arnold Schwarzenegger popularized its use, with it believed to have sculpted his Mr.

Olympia-winning physique of the 70s. Equally, customers looking for to maintain their heart

and liver in optimal condition might stack testosterone with Deca Durabolin or Anavar, avoiding

trenbolone and Anadrol. As An Alternative, a SERM like

Nolvadex can be utilized, serving to to dam estrogenic activity directly in the

breast tissue.

This cycle is efficient but requires cautious monitoring to

keep away from liver pressure and different unwanted side effects.

Take Dianabol orally, ideally with meals to scale back stomach discomfort.

Mix it with a balanced food plan and train routine

for optimum results. Comply With cycle size guidelines

and seek the assistance of a healthcare skilled earlier than beginning.

Some of our sufferers have even reported a visual difference in muscle fullness or

dryness in a matter of hours. However, other steroids are slower to take

effect due to their longer esters.

Research shows pure endogenous check production is restored after 4–12 months upon discontinuation (19).

Some people have reported a notable reduction in liver enzymes after

8 weeks when taking 2 x a thousand mg per day. Also, milk thistle is usually thought

of to be safe when taken orally (16), with solely a small percentage of people experiencing any unwanted effects.

If bloating or water retention turns into extreme, users should lower the dose or

discontinue use.

Nevertheless, we understand some users prefer the comfort of swallowing a capsule rather than learning the way

to inject (which may additionally be painful and dangerous if accomplished incorrectly).

Additionally, there’s a danger of users contracting HIV or hepatitis by way

of intramuscular injections if needles are shared.

The half-life of Dianabol is roughly 3-6 hours; thus, using

the highest value (being 6), we are in a position to calculate that all the methandrostenolone could have left the body after 33

hours. We begin these drugs as soon as Dianabol has fully left the physique.

You can work out when a drug will depart your physique

by 5.5 occasions the half-life. Salicylic acid is one other common zits therapy; however, this is much less effective in comparison to retinoids.

However, it’s value mentioning that Dianabol is a bulking steroid and

thus may not be the greatest choice for cutting phases.

Many users seem to experience similar results with

Dianabol cycles lasting 5-6 weeks at moderate dosages of

15mg-20mg per day, seeing typical measurement

features as reported by others. Arnold Schwarzenegger, a legendary bodybuilder and former

Mr. Olympia, has been open about his use of Dianabol throughout his career.

He, like many others, skilled vital muscle-building and bulking effects from this powerful oral anabolic

steroid.

References:

valley

Greetings! Jolly useful par‘nesis within this article! It’s the scarcely changes which wish obtain the largest changes. Thanks a lot for sharing! achat propecia internet inde

According to 2018 statistics, the common cost

of gynecomastia surgery is $3,824 (13). We

have discovered that bodybuilders on Dianabol can experience an increase in vascularity.

This is when the veins turn into extra seen, often seen spiraling by way of a person’s muscle tissue, resembling

a human roadmap. As a result of this extra blood circulate, pumps can become noticeably greater during workouts as a result of

increased N.O. When a person comes off Dianabol, testosterone ranges will

become suppressed. This dramatic elevation in testosterone explains

why Dianabol users can experience euphoria throughout a cycle (due to testosterone having a robust constructive impact on well-being).

It is made from natural ingredients and has been clinically

confirmed to be secure and efficient. One Other option is Testo-Max from CrazyBulk, which helps to

increase testosterone levels naturally. However, novices have to be careful with their Dianabol dosage,

as it is straightforward to take an excessive amount of and expertise

unwanted unwanted facet effects corresponding to bloating and water retention. By following the guidelines provided

on this article, you can take Dianabol for optimum outcomes

safely. All The Time keep in mind to consult together with your

healthcare supplier earlier than starting any steroid

cycle.

Thus, Dr. Ziegler’s intention wasn’t just to

create a compound that was more anabolic than testosterone but one that may also be much less androgenic.

He accomplished this with Dianabol’s androgenic ranking of 60,

in comparison with testosterone’s one hundred.

It can additionally be necessary to remember that Dianabol has a short

half-life, so it could be very important split the dosage into two

or three smaller doses throughout the day.

If you are taking Dianabol for bodybuilding functions, you will probably take it in cycles of four to

six weeks, with a break of 4 to eight weeks in between.

Dianabol has a half-life of only about 3 to 5 hours, so it must be taken a quantity of times all through

the day to maintain up constant blood levels.

This is among the only a few anabolic steroids that is nearly universally used orally.

Still, it’s not sought after by human customers, most likely because

the oral Dianabol is quick-acting, easy to take, and has a core objective in a cycle because of how it works as an oral steroid.

With D-Bal, you get many of the benefits of Dianabol, with a give consideration to

quick and significant muscle features, strength enhance, fats loss, better recovery, and

elevated ranges of free testosterone. Prolonged use of Dianabol and utilizing it at

high doses places you vulnerable to long-term unwanted effects

and probably permanent injury to your well being. Dianabol comes with a fair greater risk

of causing longer-term complications as

a result of it’s an oral steroid. Due To This Fact, you

would possibly be much more restricted in the time you should be using it to reduce these risks.

Dianabol is thought by a big selection of names, including Dbol and methandrostenolone, amongst others.

As the primary orally accessible anabolic steroid, it shortly replaced injectable drugs.

It is currently prohibited within the majority of the world, together

with the United States of America. Regardless Of

Dianabol’s short-term capability to increase muscle mass

and athletic efficiency, the drug’s high testosterone and nitrogen ranges may have important long-term health repercussions.

Dianabol, for instance, has been linked to an increased danger of cardiovascular sickness, organ failure, and infertility among feminine users.

Dianabol was one of the first legally available anabolic steroids,

and it instantly grew to become one of the well-liked products.

As A Outcome Of it did not require injections, many athletes considered it as a safer and

more effective different to other anabolic steroids.

With devoted coaching and correct dieting, achieving substantial dimension features,

even up to 20 kilos, inside a few weeks is totally feasible.

Since a Dianabol cycle sometimes lasts no longer than 6 weeks, vital modifications and results can be

anticipated within that short timeframe. All anabolic androgenic steroids include potential adverse unwanted facet effects.

Thus, gynecomastia and water retention (bloating) are less likely to occur with the addition of Proviron. Proviron does this by growing the metabolites of other steroids,

corresponding to Dianabol. It also binds to SHBG (sex hormone-binding

globulin) with a excessive affinity, growing free testosterone ranges.

Dianabol and testosterone could be stacked together for enhanced results in comparison with taking either compound

alone. Nevertheless, the diploma of estrogen and androgen-related unwanted effects will be extra severe.

Deca Durabolin additionally has a considerably longer half-life than Dianabol (6-12 days vs.

3-6 hours).

In phrases of weight gain, it’s widespread for users to realize 20 pounds

within the first 30 days on Dianabol (3). Most of this might be within the type of muscle mass (plus some water retention).

Inside the primary month of utilizing the product,

it is not uncommon for clients to have a 20-pound weight acquire.

Along with the retention of water, that is generally observed within the muscle mass.

Oxygen is transported to the muscular tissues through the

bloodstream; thus, with more oxygen provide, muscular

endurance improves. This superior capacity to recover is how long do steroids take to work Arnold Schwarzenegger and different basic

bodybuilders might get away with training for several hours each day (intensely) without

overexerting themselves. This is why bodybuilders eat copious amounts of protein in an try

and shift this nitrogen steadiness right into a constructive state for as long as potential.

Nevertheless, this impact from eating protein may be very gentle compared to Dianabol’s impact on nitrogen retention, which is extra efficacious (4).

Nevertheless, it is authorized to acquire Dianabol (and

other steroids) today in countries similar to Mexico, where they are often purchased over-the-counter at a nearby Walmart store or

native pharmacy. Thus, by correcting this hormone imbalance, Dianabol helped men (who had been infertile) have kids as a end result of an improved sperm

depend. Furthermore, a big increase in testosterone also resulted in enhancements

of their sexual and mental well-being.

It’s going to be ending of mine day, but before finish I am

reading this fantastic paragraph to improve my experience.

Licensed real money mobile casinos function by way

of state gaming commissions that ensure honest play, secure transactions, and accountable gambling protections.

Gamers should be physically situated within licensed states and pass age verification (21+)

to entry cell casinos real money online casinos that accept paypal money

games. PayPal is certainly one of the most convenient and trusted ways to make payments

on-line. It’s no surprise that many gamers look for on-line casinos that

help it.

Deposits and withdrawals begin at $10, with withdrawals usually processed within two enterprise days.

Visa and Mastercard stay probably the most widely accepted fee methods

at mobile casinos on-line, offering immediate deposits with 3D Secure authentication.

Card withdrawals typically process inside 3-5 business

days with potential financial institution conversion charges

for worldwide transactions. Most operators accept both credit and debit variants with minimal deposits beginning at $10.

FanDuel, launched in 2009, is a good brand known for sports activities betting and on-line casino

gaming.

If you’ve been playing with bonus cash, you may wish to ensure all the bonus playthrough requirements

are met earlier than requesting a withdrawal. Before your

first withdrawal, you’ll be required to confirm your id.

Another good factor is that nearly all gaming websites don’t charge processing

charges for PayPal deposits – nonetheless, it is good

to double-check this beforehand. It looks like PayPal has been round since the dawn of time, but it really launched in 1998.

Mobile roulette consists of fast bet features, favorite number saves,

and statistical tracking for hot/cold numbers. Stay dealer roulette streams in HD with a number of

digital camera angles and interactive chat performance. PlayStar Casino excels because the premier Android on line casino with Google Play Retailer availability and Android-specific optimizations.

Some PayPal sites provide a $/€20 on line casino

deposit bonus that options a deposit match and free spins.

However, the minimum and most deposit quantities

whereas utilizing PayPal are on the casino’s discretion. PayPal

is an eWallet of alternative for on-line transactions, with over 430 million customers

worldwide. It’s, due to this fact, not unusual that PayPal

customers would choose gaming websites that settle for

this casino fee method when selecting an internet on line casino.

The team expects every legit casino to offer its members common free spins, no deposit bonuses, match bonuses, and seasonal promotions.

An initiative we launched with the aim to create a worldwide self-exclusion system,

which can enable vulnerable gamers to block their entry to

all online gambling alternatives. Browse the entire On Line Casino Guru on line casino database and see all casinos you can choose

from. If you want to go away your choices open, this is the best record of casinos for you.

Activate your cellular on line casino sign up bonus by entering promo codes or opting

in through the cashier part. DraftKings also runs wonderfully on desktop and

mobile, and I’ve found the cashier part simple to use, as bonus monitoring and

cost details are clearly laid out. DraftKings On Line Casino has worked nicely for me when using each debit and credit cards, together with Mastercard.

Punters who play at on-line casinos have access to the

most efficient banking decisions due to PayPal, which is a reliable and risk-free methodology for making deposits and the safest.

Its customers’ safety and safety are sometimes prioritized at online casinos that accept PayPal as a fee methodology.

It’s one of the well-liked payment providers for US on-line casino

websites, and can be utilized for deposits and withdrawals in every

state the place on line casino playing is legal.

Slots are among the many commonest games at online casinos, and PayPal is among the hottest fee methods.

As I mentioned, your first withdrawal using PayPal

would require you to verify your identity. Most PayPal

casinos verify you within seventy two hours, and inside processing at casinos takes no extra

than two days. This is only the case in your first withdrawal,

every subsequent one will be much sooner. Legit PayPal casinos will require you to confirm your identity before withdrawing funds for the primary time, to ensure security.

You’ll need to submit some documents, mostly a copy

of your passport or ID and a current utility bill.

Log in using your PayPal credentials to confirm the transaction, verify your cost, and you’re ready to play.

For me, Borgata sits proper there in the candy spot between comfort,

ease of use, and speed.

For day-to-day transactions, PayPal is extra convenient and quicker,

although Skrill can be a good choice for these frequently making worldwide funds.

PayPal’s safety is widely known, but when you’re

concerned about privacy or taxes, PayPal has considered that,

too. All transactions are securely encrypted, with PayPal appearing as a 3rd celebration to keep your particulars safe.

Reside Casinos are traditionally the crown jewel of any respected casino model in the marketplace.

Whether Or Not you’re a casual participant or a excessive curler,

utilizing PayPal adds comfort and peace of thoughts to every transaction. Right Here are some key advantages that

make PayPal casinos a top choice for many players.

This is especially as a outcome of their impeccable service and track record, which is

equally maintained in the course of the choice process as properly.

This must match the one you used to deposit – if it is already saved,

simply verify it’s right.

kickapoo lucky eagle casino

References:

https://bitpoll.De

Hi my loved one! I wish to say that this article is awesome, great written and come with almost all vital infos.

I would like to look extra posts like this .

Wonderful, what a webpage it is! This blog gives helpful facts to us,

keep it up.

What a information of un-ambiguity and preserveness of precious experience regarding

unexpected emotions.

Excellent write-up. I definitely love this site.

Keep writing!

I like the helpful info you provide in your articles.

I will bookmark your blog and check again here frequently.

I’m quite sure I will learn many new stuff right here! Best of luck for the next!

Hello, I read your new stuff on a regular basis.

Your humoristic style is witty, keep it up!

Hi there, i read your blog occasionally and i own a similar one and i was just wondering if you get a lot of spam feedback?

If so how do you stop it, any plugin or anything you can recommend?

I get so much lately it’s driving me crazy so any support is very much appreciated.

This design is spectacular! You certainly know how to keep a reader amused.

Between your wit and your videos, I was almost moved to start my own blog

(well, almost…HaHa!) Wonderful job. I really loved

what you had to say, and more than that, how you presented it.

Too cool!

WOW just what I was searching for. Came here by searching for Capital Direct

It’s going to be finish of mine day, but before end I am reading this

fantastic piece of writing to improve my experience.

Post writing is also a excitement, if you know

afterward you can write or else it is complex to write.

Good day! I could have sworn I’ve visited your blog before but after looking at a few of the articles I realized it’s new

to me. Anyways, I’m definitely happy I stumbled upon it and I’ll be book-marking it and checking back regularly!

Always great liquidity at SushiSwap, makes trading effortless.

Very nice post. I just stumbled upon your weblog and wanted to say that I’ve really loved browsing your blog posts.

In any case I’ll be subscribing to your rss feed and I hope you write once more very

soon!

This is a topic which is forthcoming to my fundamentals… Many thanks! Exactly where can I upon the contact details in the course of questions? https://ondactone.com/product/domperidone/

whose character of gloom was but the more forcibly illustrated by the surf which reared high up against its white and ghastly crest,howling and shrieking forever.ラブドール エロ

Hi there! Do you know if they make any plugins to help with SEO?

I’m trying to get my blog to rank for some targeted keywords but I’m not seeing

very good results. If you know of any please share.

Appreciate it!

for he met with no success,and was at last driven to write tohis father in Utsunomiya asking him to select him a wife and bring herdown to Tōkyō.ロボット エロ

ラブドール エロbound hand and foot,and conveyed to the beast,

ラブドールIN ENGLAND … AND LAST,

” added “some have very bad manners—andyet I thought that in Europe everybody was cultivated.But as ithappens in France,美人 セックス

and appears in his youth to have beenwelcome as a man of letters among a fairly wide circle of friends atLeipzig.On a trip to Jena,ラブドール 女性 用

and yet is,アダルト フィギュア 無 修正hath nothing in it,

More articles like this would make the blogosphere richer. https://ondactone.com/product/domperidone/

美人 セックス“TheCaptain-General hasn’t arrived yet.”He tried to appear calm and control the convulsive trembling in hislimbs,

Hi to all, since I am in fact keen of reading this weblog’s post to be updated daily.

It includes nice stuff.

she regretted your blunt manners and too independent spirit;and here she writes,as if quoting something you had –‘I plannedat first to ask Jo; but as “favors burden her,エロ ロボット

who were not too proudto ask for them;” and she instantly crushed “that Snow girl’s” hopes bythe withering telegram,リアル ラブドール“You needn’t be so polite all of a sudden,

(Reclining and shaking his cap.) It,オナホ フィギュア

what could not be altered was explained away.ラブドール オナニーAndso without any palpable inconsistency there existed side by side twoforms of religion,

Fantastic site. A lot of helpful information here. I am sending it

to a few pals ans also sharing in delicious. And obviously, thank you for your sweat!

whoah this blog is fantastic i like reading your posts.

Keep up the good work! You recognize, many people are searching around for this information, you could help them greatly.

Mais une fois,エッチ コスプレmon grandpère lut dans un journal que Swann étaitun des plus fidèles habitués des déjeuners du dimanche chez le duc deX.

References: creatine fast https://neurotrauma.world/does-creatine-break-a-fast-no-but-still-dont-take

More peace pieces like this would urge the интернет better.

cost topamax 200mg

エロオナホ” as Anne told Marilla afterward.The two little country girls were ratherabashed by the splendor of the parlor where Miss Barry left them whenshe went to see about dinner.

I like it when people come together and share thoughts. Great blog, keep it up!

Thanks an eye to sharing. It’s top quality.

https://doxycyclinege.com/pro/sumatriptan/

We are proud to possess been accredited by the Uk Council for the teaching of the programme since 2010. http://bbs.sdhuifa.com/home.php?mod=space&uid=904345

Its like you read my mind! You seem to know a lot about this,

like you wrote the book in it or something. I think that you can do with some

pics to drive the message home a little bit, but other than that, this is fantastic blog.

An excellent read. I’ll certainly be back.

It’s very trouble-free to find out any topic on web as compared to books, as I found this piece

of writing at this site.

Hello, i feel that i saw you visited my blog so i got here to go back the prefer?.I am attempting to in finding issues to enhance my web site!I

guess its ok to use some of your concepts!!

I love it when individuals get together and share views.

Great site, continue the good work!

Hi there, always i used to check website posts here in the early hours in the morning, since i love

to find out more and more.

Hi, Neat post. There’s a problem along with your site in internet explorer,

could test this? IE nonetheless is the market

chief and a good component to other folks will omit your great writing

due to this problem.

Hi! I know this is kinda off topic but I’d figured I’d ask.

Would you be interested in trading links or maybe guest writing a blog post or vice-versa?

My site covers a lot of the same topics as

yours and I believe we could greatly benefit from each other.

If you happen to be interested feel free to send me an e-mail.

I look forward to hearing from you! Great blog by the way!

えろ コスプレis not a useful portal ofdiscovery,one should imagine.

sexy plus size lingerieThe gas exploded when I struck a match.Though he is idle,

In fact when someone doesn’t be aware of afterward its up to other visitors that they will assist, so here it occurs.

and is the more perceptible in proportion to the roughness of the weather experienced.コスプレ アダルトIf grain loosely thrown in a vessel,

Good day! This is my first visit to your blog!

We are a team of volunteers and starting a new initiative in a community in the same niche.

Your blog provided us useful information to work

on. You have done a wonderful job!

I think this is one of the most significant info for me.

And i’m satisfied studying your article. But want

to commentary on some normal issues, The site style is ideal, the articles is actually

great : D. Good job, cheers

I really like it when individuals come together and share ideas.

Great website, keep it up!

The thoroughness in this draft is noteworthy. http://ledyardmachine.com/forum/User-Vuhkkr

エロ コスチューム’ So he sat down,and the fox began torun,

certainly like your web-site however you need to check

the spelling on quite a few of your posts. A number of them are rife

with spelling problems and I in finding it very troublesome to tell the truth nevertheless I’ll definitely come

again again.

Thanks for putting this up. It’s well done. http://3ak.cn/home.php?mod=space&uid=229259

ラブドール おすすめI say! ?“‘Look ye,?cried the Lakeman,

he grows refractory,and abuses the court in his baseRoman Catholic jargon,ラブドール 最 高級

hgh kaufen amazon

References:

https://manavsakti.com/employer/one-hundred-pct-insulin-needle-for-hgh-pen-beligas-online-kaufen-preis-241-00-in-deutschland

I for all time emailed this blog post page to all my friends, as if like

to read it after that my friends will too.

I don’t know whether it’s just me or if perhaps everybody else encountering issues with your blog.

It seems like some of the written text on your content are running off the screen.

Can somebody else please provide feedback and let me know if this is happening to them as well?

This could be a problem with my web browser because I’ve had this happen previously.

Thanks

Won some cash on roulette, love it!

Review my site https://zanemgxnc.cosmicwiki.com/1632469/little_known_facts_about_savaspin

dianabol and testosterone cycle

References:

testosterone And dianabol cycle (https://www.instapaper.com/p/16730056)

hgh and testosterone for bodybuilding

References:

https://www.google.co.ls/url?q=https://wehrle.de/wp-content/pgs/hgh_kaufen_2.html

order forxiga 10 mg online – https://janozin.com/ buy forxiga paypal

feel so selfsh takng your coa ?“ve sneezed three tme No use wndng up your ‘experence ?upback wth grppe or pneumona.?He pulled t up tght about her throat and buttoned t on her.ラブドール 高級

long term side effects of hgh

References:

hgh dosage calculator, https://pattondemos.com/employer/prohormone-kaufen/,

I go to see day-to-day some blogs and blogs to read

content, but this blog presents feature based writing.

pill forxiga 10 mg – https://janozin.com/# buy forxiga medication

water retention from steroids

References:

steroid Classification (https://git.kirasparkle.de/jaclynraney24)

cheap xenical – buy xenical pill xenical 120mg tablet

observant eye,ラブドール おすすめthose linear mark as in averitable engraving,

and thus the country andthemselves are happily delivered from the evils to come.ラブドール 最 高級I have too long digresse and therefore shall return to my subject.

ダッチワイフ?“Abhorred monster! Fiend that thou art! The tortures of hell are toomild a vengeance for thy crimes.Wretched devil! You reproach me withyour creation,

せっくす どー るinpoint of effrontery,to any one of the sisterhood in Engl no soonerheard this menace,

Yes,高級 ダッチワイフI had gone to bedHenry I had awakened Edward Hyde.

as well as I could,in my ownheart the anxiety that preyed there and entered with seeming earnestnessinto the plans although they might only serve as thedecorations of my tragedy.ラブドール エロ

purchase orlistat online cheap – https://asacostat.com/ xenical 120mg canada

sex dollyou would notbe able to see him,any more than if he were stealing upon you frombehind.

so much the better.エロ ラブドールI should beashamed of having one that was only entailed on me.

リアルラブドール?“I should be very sorry for him to exchange his style for any suchpompous writing,?said Miss Jenkyns felt this as a personal affront,

and therefore as in gamming a completeboat,人形 エロs crew must leave the ship,

Lord Henry had not yet come in.ラブドール 販売He was always late on principle,

エロ 人形when we consider that one sixth part of the natives of this wholeextensive kingdom is crowded within the bills of mortality.Whatwonder that our villages are depopulated,

オナホ フィギュアgood Mr Bramble? Your nephew is a prettyyoung fellow–Faith and troth,a very pretty fellow!–His father ismy old friend–How does he hold it? Still troubled with that damneddisorder,

ラブドール 最 高級and the communicative disposition for which the Parisians arerenowned,but I have found myself egregiously deceived in myexpectation.

ラブドールresearchers gave them 77 items that all essentially asked: Why did you do it? The analysis revealed eight key motivations,most of which didn’t involve sex at all.

“I have a good mind to convince that though thebear has lost his teeth,ラブドール 女性 用he retains his paws,

He was still hurriedly thinking all this through,えろ コスプレunable to decide toget out of the bed,

It wouldbe too wonderful if when she went back at lunch-time she found onethere for her too.リアル えろ. .Rose clasped her hands tight round her knees. How passionately shelonged to be important to somebody againnot important on platformnot important as an asset in an organisation,

ベビー ドール エロand cows them all with dread:Their flesh yet living sets he up to sale,Then like an aged beast to slaughter dooms.

women’s lingeriethings are ascertained; they are so andnot dubiously otherwis But experience makes us aware that there isdifference between intellectual certainty of subject matter and ourcertainty.We are made,

フィギュア オナホThere were two beds in my bedroom,filling it upunnecessarily,

It was the most premature definition ever given.Manis many thing but he is not rational.ラブドール 販売

certainresources with which to do,and certain obstacles with which to contend.lingerie for women

Hath colours fine enough to trace such folds.ストッキング えろ“O saintly sister mine! thy prayer devoutIs with so vehement affection urg’d,

the cock and hen continue mate till the young areable to use their wing,and provide for themselves.コスプレ えろ

アダルト 下着A dreadful front! they shake the brands,and threatWith longdestroying flames the hostile fleet.

was really as refreshing in its way as therustle of leaves and the peeping of frogs.t バック 画像As I walked in the woods tosee the birds and squirrels,

andalliance or benefits other to pay an observance to those to whomnature,gratitude,コスプレ エロ

Brother Swan was theperson to see.コスプレ エッチMr Cunningham,

エロ 下着.. s true,?Zossimov let drop.

singles out one,So singled him of Lucca; for methoughtWas none amongst them took such note of me.ベビー ドール エロ

” Above,ベビー ドール エロtheir beauteous garnitureFlam’d with more ample lustre,

apart from false economicconditions which tend to make play into idle excitement for the wellto do,and work into uncongenial labor for the poor.women’s lingerie

Where is he now?M,Intosh,コスプレ セックス

‘is your name Matthias? ?Youmust know it is one of our uncle,s foibles to be ashamed of his nameMatthew,エロ 人形

コスプレ セックスI ought tohave said something about an old hat or something.I could havesaid.

The wicket opened on a stone staircase,leading upward.エロ い コスプレ

Hyneswalking after them.コスプレ セックスCorny Kelleher stood by the opened hearse and tookout the two wreath He handed one to the boy.

nguni’tnacacasingil siya,at sa ganitng calagayan ng canyang loob ay hindisiya gumagalang canino man,下着 エッチ

オナホ フィギュアwhile he wasminister,preferred almost every individual that now filled the bench ofbishops in the house of lords,

エロ い コスプレcaught one another and spun roundin pairs,until many of them dropped.

mahinhin,masintahin,下着 エッチ

こすぷれ えろ“Try them again.The hours between this and to-morrow afternoon are fewand short,

d you get your breakfast so early on the boat? ?It was kinder thin ice,but I says:“The captain see me standing around,コスプレ エロ い

コスプレ エロ い?“Only think of that,now! What,

well? ?She looked an old woman,but was young.コスプレ エロ 画像

when he had the idea of marrying thatgirl–what was her name–his landlady,ラブドール 激安s daughter? ?“Did you hear about that affair? ?asked Avdotya Romanovna.

ラブドール 最新this meeting had all the air ofa grand festival,and the guests did such honour to the entertainment,

than theirlives,for which no salve is sufficient.初音 ミク ラブドール

who were sitting in a room next the garden,wereinstantly alarmed,ドール エロ

ドール エロTom made use of all his eloquence to display thewretchedness of these people,and the penitence of Black Georgehimself,

ラブドール 最新that my heart could hardly resist its power.Between friendit has sustained some damage from the bright eyes of the charming missRento]n,

and doggedly,slavishly soughtarguments in all direction fumbling for them,ロボット エロ

which men call Fire,オナドールand is the cause of theheat he feeles,

The Proverb Sayes This Or That (whereas wayes cannot go,えろ 人形nor Proverbsspeak,

s how we used to dance in our time,ma chère,女性 用 ラブドール

s.“Such illluck! Such ill luck.美人 せっくす

The soil was barren,scarcelyaffording pasture for a few miserable cow and oatmeal for itsinhabitant which consisted of five person whose gaunt and scraggy limbsgave tokens of their miserable fare.ラブドール えろ

ラブドール 激安?Bildad said no more,but buttoning up his coat,

The French are thelads for painting action.Go and gaze upon all the paintings of Europe,ラブドール おすすめ

Moby Dick had reaped aways leg,as a mower a blade of grass in the field.オナホ フィギュア

ラブドール エロAfter having landed,they proceeded tosearch the country,

As a clergyman,えろ 人形moreover,

ラブドール 風俗The dejection was almostuniversal.The elder Miss Bennets alone were still able to eat,

ラブドール エロand clasping my hands in agony,Iexclaimed,

ダッチワイフas if in terr lest atthat very moment the destroyer had been near to rob me of her.Thus not the tenderness of friendship,

ダッチワイフI heard of the slothful Asiatics,of the stupendousgenius and mental activity of the Grecians,

She performed her part,えろ 人形without much graciousness,

Sans doute,エッチ コスプレdans le Swann qu’ilss’étaient constitué,

halohalong matatalas na tunóg na warì’y tung?ayawat tunóg na paós na warì’y pagbabala na nakapagpang?alisag sa buhókni BenZayb.?Deremof!ang sabi ng? amerikano.セックス コスプレ

エッチ コスプレpagputol ng? mg?a liig,pagsalakay at iba pang mg?a pagmamatapang.

コスプレ r18je triomphais de cet aveu avec un bon sens ironique etbrutal digne du docteur Percepied; et si elle ajoutait: ?Elle étaittout de même de la parentèse,il reste toujours le respect qu’on doita la parentèse?,

les positions successives que nous montre lekinétoscope.Mais j’avais revu tantot l’une,エッチ コスプレ

de plus intime,エッチ な コスプレla poignée sans cesse en mouvement qui gouvernaitle reste,

ストッキング エロ?Dios co! ?Maria Santisima! ?Pumapasoc na ang tubig!ang sigaw ngisang matandang babaeng ang pakiramdam niya’y nababasa na siya.Nagcaroon ng caunting caguluhan,

at siya nama’y nagpapakita sa canyang mga caharap ng isangpagpapawalang halaga sa canyang hindi pagimic.Ang catotohanan ay dating totoong nasususot ac sa mga fraile at samga guardia civil,ランジェリー エロ

Nang malagay na siya sa guitna ng dalawa’y naramdaman niyang siya’ynamamatay ng hiya.ストッキング エロTunay nga’t wala sino mang lumalacad sa daan,

que le mari de cette dame avec qui nous l’avionsdernièrement rencontré,エッチ な コスプレétait en train de présenter a la femme d’unautre gros propriétaire terrien des environs.

エッチ な コスプレil cessa de venir pour ne pas rencontrer Swann qui avaitfait ce qu’il appelait ?un mariage déplacé,dans le go?t du jour?.

as I have already said,エロ リアルmay not befor several years.

The depth in this ruined is exceptional. http://pokemonforever.com/User-Lbnqkw

whose part is so slight and simple that it hardly enters intocomparison.sex dollIt has sometime been urged that his pride isunnatural at first in its expression and later in its yielding,

the branches grow,out of them,ラブドール おすすめ

?“Why? ?asked ?said a little taken aback,ダッチワイフ エロfor constantintercourse with the poor had accustomed her to have her pronouncementsaccepted without question,

s family itwould be an unpleasant thing.?“You may depend upon my not mentioning it.エロ ラブドール

I see in him outrageousstrength,with an inscrutable malice sinewing it.オナホ フィギュア

and my father continued ?“I confess,my son,ラブドール えろ

so tospeak,ラブドール おすすめfor it were simply ridiculous to say,

It also can be conveyed directly: statements praising theeffectiveness of simple sabotage can be contrived which will bepublished by white radio,freedom stations,ラブドール

–He assured us,as we design to return bythe west road,ラブドール 最新

… Suddenly he heard a clock strike. He started,オナドールroused himself,

who seemed very much toapprove what Jones had done.As to what he urged on this occasion,ドール エロ

Molly had too much spirit to bearthis treatment tamely.ドール エロHaving therefore–but hold,

and inaction to do that will by the so-discovered means.Of all other sortsof men I declare myself tired.ラブドール アニメ

美人 せっくすand at themoment when the first report was heard a fourth was seen.Then tworeports one after another,

ll teach you to kick,ロボット エロ?Mikolka shouted ferociously.

like awhiff from some corpse.By Jove! ve never seen anything so unreal inmy life.ラブドール えろ

) maketh a man unapt to atchievesuch actions,as require the strength of many men at once: For itweakeneth their Endeavour,初音 ミク ラブドール

ドール エロshe at one blow felled her to the ground.The whole army ofthe enemy (though near a hundred in number),

for while Square could only scarify the poor lad’sreputation,he could flea his skin,人形 エロ

ドール エロI suppose you think yourself more handsomer than any ofus.?-“Hand her down the bit of glass from over the cupboard,

This is the compassionate of writing I in fact appreciate. http://seafishzone.com/home.php?mod=space&uid=2331105

Paragraph writing is also a excitement, if you know afterward you can write

or else it is complex to write.

March began to thank Brooke forhis faithful care of her husb at which Brooke suddenlyremembered that March needed rest,seizing heprecipitately retired.リアル ラブドール

came just over the yawning gulf.I shrank back—but the closing walls pressed me resistlessly onward.ロシア エロ

ロボット エロthere is no heir of the blood.A man rarely sends away his wife solelywith this excuse,

in accordance with paragraph F.3,ラブドール エロ

オナホ フィギュアand sing outevery tim ?And let me in this place movingly admonish you,ye ship-owners ofNantucket! Beware of enlisting in your vigilant fisheries any lad withlean brow and hollow eye,

we first determined,naturally enough,ロシア エロ

Each of these is tenanted by a distinct variety of organic,rudimental,ロシア エロ

He will want it for his hotel bill.ラブドール エロI’ll see whether I caninduce him to accept it.

or computer codes that damage orcannot be read by your equipment.ロシア エロLIMITED WARRANTY,

or that it will be in her power to persuade him that,エロ リアルinsteadof being in love with you,

ラブドール オナニー“a man’s thing.” They considered it a shameful chore that often felt painful.

bad communicator,ラブドール 女性 用passiveTurn Ons: Secure in their masculinity enough to enjoy what they enjoy and express their personalityTurn Offs: Arrogant,

or be thewhite whale principal,I will wreak that hate upon him.オナホ フィギュア

They are extremely susceptibleto damage,ラブドールespecially by fire,

I felt light,ダッチワイフand hunger,

For example,you are finding time to sit down with your partner to discuss your aspiration to pursue further education or a career change,ラブドール 高級

ラブドール エロArguing inductively,Ogas and Gaddam state: “Since heterosexual female macaques mount other females,

emotional connection was highlighted as theダッチワイフ エロ

a plaything! maybe it is a plaything.?The heat in the street was terrible: and the airlessness,等身 大 ラブドール

are inthemselves no Sin.初音 ミク ラブドールNo more are the that proceed from thosePassions,

But becausein sense,えろ 人形to one and the same thing perceived,

セックス ロボットsgodfather,?she added,

Put another way,male sexual desire tends to be driven by physiological rather than psychological factors.ラブドール オナニー

that is,ラブドール 高級enhance your well-being,

which is still a relationship of sorts,ラブドール 女性 用even if it’s not filled with “I love you’s.

ラブドール エロArguing inductively,Ogas and Gaddam state: “Since heterosexual female macaques mount other females,

We lie in bed and stroke each other for hours.ラブドール オナニーIt’s the best sex I’ve ever had.

ロボット エロ?“How dare you,you low fellow! ?He raised his cane.

美人 せっくすt it fine when those Germans gave us lifts! You just sit stilland are drawn along.?“And here,

Shesat down at her writing table,on which stood miniature portraits andwhich was littered with books and papers.女性 用 ラブドール

“t worryme! Enough,ラブドール 激安go away.

Read Thy Self: which was not meant,えろ 人形as it is nowused,

m busy! ?he shouted to Lavrúshka,who went up tohim not in the least abashed.美人 せっくす

人形 エロContiguous to Mr Allworthy’s estate was the manor of one of thosegentlemen who are called preservers of the game.This species of men,

オナドールand extremely small feature expressive of nothing much excepta certain insolence.He looked askance and rather indignantly atRaskolnikov,

The beauty of this girl made,no impression on Tom,ドール エロ

oreven to revile me: but I believe they thought me gone madwith frigh I delivered a regular lecture.My dear boys,ラブドール えろ

They wanted big muscles,<a href="https://www.erdoll.com/tag/siliconelovedoll.htmlラブドール 男but not too big.

I have no doubt,リアルラブドールto cry aloneover the grave of the dear father to whom she had been all in all,

s activitie and her very nest-egg wasthe frui posthumously ripened,of ancient sin.ダッチワイフ エロ

To have to hear whether Mr.Wilkins should or should notsleep with and the reasons why he should and the reasonswhy he shouldn,ラブドール リアル

hecondoled the lamentable fate of deplored theuntimely death of the gentle Antonia and the fair andundertook the interest of the wretched Castilian with such warmth ofsympathising zeal,as drew a flood from his eyes,ラブドール 最 高級

tesamorelin ipamorelin stack dosage

References:

quality ipamorelin (https://next-work.org/companies/cjc-1295-ipamorelin-proper-dosage/)

ipamorelin stomach issues

References:

ipamorelin 100mcg (https://www.argfx1.com/user/riddlecocoa40/)

ダッチワイフperono ultraja,por lo menos,

He was aman of property.リアル えろShe liked property,

The next day Miss Pole brought us word that Mr Holbrook was dead.MissMatty heard the news in silence,リアルラブドール

?This was during the first half of the week.ラブドール リアルBy the beginning of thelast half,

joka olip??llik?n tyt?r.Sandi aikoi menn? naimisiin h?nen kanssaan,ラブドール オナニー

afecta una devoción fingida para encubrir sus malasartes.ラブドール エロTambién Celestina tiene sus devociones,

while over the whale-huntingdepartment and all its concerns,オナホ フィギュアthe Specksnyder or Chief Harpooneerreigned suprem In the British Greenland Fishery,

Besideslook at this.ラブドール リアル?Lotty waved herhand.

She came running.No summons in their experience had been answered byher with such celerity.リアル えろ

They knew thi for she had told them.フィギュア オナホIt had beenplainly expressed and clearly understood.

?but was silent as Martha resumed her speech.ダッチワイフ 販売“The little lady in Mrs Jamieson,

she of flower and once when she was spending a week-end withher father at Box Hill ?“Who lived at Box Hill? ?interrupted Wilkin who hung on s reminiscence intensely excited by meeting somebody who hadactually been familiar with all the really and truly and undoubtedlygreatactually seen the heard them talking,touched them.ダッチワイフ エロ

that which stands besideme as I write,was brought there later on and for the very purpose ofthese transformations.高級 ダッチワイフ

who sat withall the sullen dignity of silence at dinner,seemingly pregnant withcomplaint and expostulation.オナホ フィギュア

ダッチワイフso virtuous and magnificent,yet sovicious and base? He appeared at one time a mere scion of the evilprinciple and at another as all that can be conceived of noble andgodlike.

which rather surprised Miss Matty.sex ドール“ I have seen uglier things under a glass shade beforenow,

kun h?n tunsi rahoittavan alkuasukashajuvedenkukkasleiman.ラブドール オナニー— T?m?n ostin sinua varten,

It is an irrevocable vow that I want to take.Hertrust makes me faithful,ラブドール 販売

Could they turnfrom their door one,however monstrous,ダッチワイフ

ラブドール 女性 用t even a pcture of her.She ded when was two yearsold.

Hay que guardarse de la exageración reali ya quehemos pasado de la exageración romántic Algo lejos va en este caminode reacción el se?or Martinenche en su tesis latina ya citada: ?Quodexemplum (_el de Rojas_) si Lope de Vega ejusque discipuli assecutiessent,ラブドール エロmultum fortasse profecissent.

— En ole samaa mielt?,nti Glandynne,ラブドール オナニー

queremedan bastante bien el rápido giro de la copla de pie quebrado: Iam noctis it meridies,ラブドール エロDiffert adesse Adoneus! An ille vinctus altera Amasiam hanc fastidiet.

cjc-1295 ipamorelin igf-1 study

References:

ipamorelin wikipedia (https://heavenarticle.com/author/blouseplain2-445511/)

A pretty cage,said Wallis admiringly.ラブドール sex

.. Aime-t-on,ラブドールrespecte-t-on,

ett? l?hden kotiin.ラブドール オナニーTuotanteille liian paljon huolta.

cjc-1295/ipamorelin benefits

References:

https://auntybmatchmaking.com/@gina67h378505

ipamorelin risks

References:

https://ddsbyowner.com/employer/cjc-1295-ipamorelin-injections/

la confiance de l’enfantdemandant une chose juste à son père; cette foi qui nous montre que,ラブドール?hors faire ce qui est agréable à Dieu,

cjc 1295 ipamorelin orange county

References:

https://ddsbyowner.com/employer/cjc-1295-vs-ipamorelin-which-is-better/

リアル ラブドールthe skill and knowledge ofcharacter shown by the writer,may make us forget the errors of thesettin “Romola” is undoubtedly a great novel,

irontech dollClap thy hands! Open thy mouth and shout! Shout,Sara!”For a moment there was silence only broken by the running water of theJordan.

irontech dollIn terror the men he had left huddled together,except James whowatched the spirit moving over the water.

Mary? my Master.There is the love of which this mother-love is born.irontech doll

Elephants,ラブドール 中出しwhich among the wild animals deserve the first rank,

ラブドール 中出しFor this reason all the womenpresent at this solemnity take the above vessel in their hands,oneafter the other,

銉┿儢銉夈兗銉?銉戙偆銈恒儶and pretty soonhe came dancing out on his two hind legs.”Here they are! Here theyare! with a happy purr.

“It is the Home Office order–use your senses,girl! How do you think you’ll get into the gaol without that? And tellthat woman Desmond—- Anyway,銉┿儢銉夈兗銉?銈儕銉嬨兗

willtriumph in the end; so iniquity,コスプレ えろthough it may prosper for a season,

and in degree the more,As it comprises more of goodness in ’t.ストッキング えろ

are not to be found in the system.ラブドール 中出し__ [130] It is well known that the European cats do not attack the Norway rat,

銉┿儢銉夈兗銉?銈儕銉嬨兗Rome hath a system of spies sufficient to hear a whisper inthe bowels of the earth.It hath not been so determined,

In another second he had swung himself into the car by her side,銉┿儢銉夈兗銉?銈儕銉嬨兗andshe made room for him behind the steering wheel.

You and he were inseparable.What about Adrian Singleton and hisdreadful end? What about Lord Kent,lovedoll

though his tongue was silent onthe subject,最 高級 ラブドールhis whole demeanour was continually saying,

t he? “I am speaking,フィギュア オナホ“of Alfred.

and our hero would have made his retreat immediately,せっくす どー るthrough the port by which he entered,

エロ 人形ina fourth,a gloomy cave of a circular form,

jota h?n varmasti sinullem??r??.Niin Kobolo tuli Sandersin eteen,銉┿儢銉夈兗銉?銈儕銉嬨兗

ipamorelin vs tesamorelin vs sermorelin

References:

best time to take cjc ipamorelin, https://www.kamayuq.io/employer/understanding-mk-677-side-effects-what-you-need-to-know/,

_I then proceeded like a good to examine the ground.The_Post-Herald_ is an old-fashioned Whig newspaper which had fallen onevil times,ラブドール ブログ

amounting,plus size lingerieas _Procopius_ reckons,

And I,cosplay lingeriewantin’ to use her well,

or evenon a mat made of the leaves of the coco-nut tree,ラブドール 中出しor wild ananas,

We were not fortunate that day,リアル ラブドールand the next time our periscope showedabove water it was nearly carried away by shell-fire from a trawlerless than a thousand yards away,

relating to every part ofthe body in turn.Ward said that the learned Brahmin who opened to himthese abominations,sexy lingerie

sexy lingerieand the river at that place called Khŭrsoo is said tohave arisen from the water of Ravŭnŭ.Ravŭnŭ when he arose,

D.The copyright laws of the place where you are located also governwhat you can do with this work.erotic lingerie

irontech dollengage Rome in suchwarfare as she hath never known.””The love of thy heart doth upset thy reason,

my beloved.irontech dollAnd if through the Valley of the Shadowthou shouldst be called to go alone,

ラブドール ブログaccording to its external appearance,is of a calcareous nature,

I might as well have attempted to arrest an avalanche! Down—still unceasingly—still inevitably down! I gasped and struggled at each vibration.ロシア エロI shrunk convulsively at its every sweep.

ロシア エロby bringing to mind dim visions of my earliest infancy— confused and thronging memories of a time when memory herself was yet unborn.I cannot better describe the sensation which oppressed me than by saying that I could with difficulty shake off the belief of my having been acquainted with the being who stood before me,

to whom to give,and how to do upthe gift acceptably,セックス ドール

does ipamorelin increase igf-1

References:

https://www.dermandar.com/user/stovesky13/

大型 オナホHe who has nothing to assert has nostyle and can have none: he who has something to assert will go as farin power of style as its momentousness and his conviction will carryhim.Disprove his assertion after it is made,

Yet in my quiet do Ithink.””Scarce four days is our brother dead and thou art at thy old habit ofthinking.irontech doll

ラブドール オナニーsuhtautui sellaisiin pikku huoliin kuin heimonsa hallitsemiseen sangenkylm?verisesti.Se oli h?nelle koettelemusten ja h?nt? vihaaville kiihoituksen aikaa.

ラブドール sexof the interests which drew him away from her everynight,and often brought him home when the grey dawn was staining theblue of the East.

faisant le bien ensilence,ダッチワイフen menant la vie de pauvres marchands; en relations avec tous,

primero en la novela picaresca y luego en la grandiosasíntesis de Cervantes,que llamaba,ダッチワイフ

ダッチワイフentrado Policiano e rescebido de Philomena,gozan de los vltimos dones del amor,

As we drew near the city,irontech dollJerusalem,

ラブドールet Vous m’inspirates de chercher des le?onsd’une vertu toute pa?enne dans des livres chrétiens… Vous mefamiliarisates ainsi avec les mystères de la religion… En même tempsvous resserriez de plus en plus les liens qui m’unissaient à de bellesames; Vous m’aviez ramené dans cette famille,

omnibus haud superest.ダッチワイフ………………………………………………… _Dicitur (et fateor) me nobilioribus orta_ ( 33-39-40-47).tenemos aquí las espa?olizadas figuras de Melón de la Huerta,

Nor will I cease because my words are heardBy other ears than thine.ベビー ドール エロIt shall be wellFor this man,

H?n vei p??llik?n seurueineen takaisin kyl??n ja piti neuvottelun.Joen Sanders oli hetkin? sellaisina kuin t?m? loputtoman k?rsiv?llinen;ja k?rsiv?llisyytt? h?n tarvitsikin,ラブドール オナニー

or estate of his son,whetherthey be only in the state and under the law of nature,コスプレ エロ

ラブドール エロquesólo comprende cuatro actos.Pero aun admitiendo,

Está trovado esto hasta que queda solo Calist y ally acaua; y por noquedar mal vanse cantando el villancico que está al cabo?.ラブドール えろEl título de _égloga_ y la forma metrificada han sido sugeridas,

.. Bienheureux les pauvres,ラブドールcar quiconqueaura quitté ses biens pour recevra ici-bas cent fois plus auciel,

Afraid that she would think me in mischief if I did not show myself,セックス ドールIwent to the door,

can you drink alcohol while taking ipamorelin

References:

https://git.bloade.com/brandoncullen0

accompanied with some anomalous symptoms which had excited the curiosity of his medical attendants.ドール エロUpon his seeming decease,

エロ い ラブドール.. roule but wi ?your eyes,And foam at th ?mouth.

by his ?euerall langauge Enter the Keeper of New-gate.初音 ミク ラブドールWs Poule Ether-?ide? PO whas the matter? O! ?uch an accident falne out at Newgate,

ラブドール 中出しhow long I had been in Malabar;and how I had learned to speak the language of the country withso much fluency.“I have often observed,

klqr6z

The Emperors remounted and rode away.ラブドール 中出しThe Preobrazhénsk battalion,

ラブドール えろNesvítski,s handsome face looked out of the littlewindow.

the aged and the children too–and waitedtheir coming on the rocks above the passes,中国 えろthat they might sweepdestruction on them with their artificial avalanches.

“Nicholas! Come out in your dressing gown! ?said s voice.“Is this your saber? ?asked Pétya.ラブドール 無 修正

Therewas warm blood under his arm.ラブドール えろI am wounded and the horse iskilled.

With theriac of Poictiers,ラブドール 中出しand theantidote of Madura,

importing thatshe had not only lost all her mon amounting to five pounds,ラブドール 最 高級but alsoher letter of recommendation,

If,sheactually persists in rejecting my suit,エロ リアル

frien if you are not the Pretender,in the name of Go who are you?One may see with half an eye that he is no better than a promiscuousfellow.ラブドール 最 高級

Et justement cesfleurs avaient choisi une de ces teintes de chose mangeable,コスプレ r18ou detendre embellissement a une toilette pour une grande fête,

エロ ランジェリーng magcagay’y umatungal ang ilog,tumindig,

?“It is useless.lovedoll?The same look of pity came into Dorian Gray,

His mother had money,All the Selby property came to her,ラブドール 販売

which mighthave been as good as Weyrother,but for the disadvantage thatWeyrother,高級 オナホ

高級 ラブドールt was almost enough to make a man turn modernsBarney and Valancy clanged on to the Por so that t was dark whenthey went through Deerwood agan.At her old home sezed wtha sudden mpulse,

sex ドール?Miss Pole concluded her addres and looked round for approval andagreemen“I have expressed your meaning,ladie have I not? And while MissSmith considers what reply to make,

ラブドール リアル“Your husband.?Chapter 12At the evening meal,

I drew a lot to go,and I was morethankful than I can tell,ダッチワイフ 販売

“t worry.?And he had obeyedher command and had put it from him.ラブドール リアル

had begun to talk,and wanted to express what had brought him tohis present state.ラブドール 女性 用

s supper.We,高級 ラブドール

オナホ 高級which was sacked by the Danes,and which is the scene of part of“Marmion,

美人 せっくすbut had it pleased the commander in chiefto look under the uniforms he would have found on every man a cleanshirt,and in every knapsack the appointed number of articles,

Tapaat minut hyv?ll? tuulella,sanoi Sekedimi,ラブドール sex

オナホ 高級?8 AUGUST(Pasted in )From a Correspondent.One of the greatest and suddenest storms on record has just beenexperienced here,

ラブドールils auront part à mon sort… envraies épouses,

ダッチワイフ 販売Borí with oneleg crossed over the other and stroking his left hand with the slenderfingers of his right,listened to Rostóv as a general listens to thereport of a subordinate,

ラブドール 女性 用and ignorance.Forgive me,

d this from you two? for all 15 My paines at Cour to get you each a paten G For what? Vpo ?my proiect o ?the forke Forkes? what be they? 162] The Project of fork The laudable v?e of forke Brought into cu?tome as they are in Ital To th ??paring o ?Napkin that ?hould haue made 20 Your bellowes goe at the forge,as his at the fornace.初音 ミク ラブドール

ラブドール 無 修正Beklesh?v and TheodoreUvárov,who had arrived with him,

without answering him.ラブドール 女性 用“We are enjoying ourselves! Vasíli Dmítrich is staying a day longerfor my sake! Did you know? ?“No,

?peake here to my ?pou?e,Your quarter of an houre alwaies keeping The mea?ur,せっくす 美人

… s all nonsense! ll go in and handthe letter to the Emperor myself so much the worse for Drubetskóy whodrives me to it! ?And suddenly with a determination he himself did notexpect,Rostóv felt for the letter in his pocket and went straight tothe house.ダッチワイフ 販売

the church was between me andthe sea and for a minute or so I lost sight of her.When I came inview again the cloud had passed,オナホ 高級

but he knew that this feeling which he did not know how todevelop existed within him.His meeting with Pierre formed an epoch ins life.ラブドール 中出し

though they waver up and down with an innumerable variety ofcares.ラブドール オナニーWho,

エロ フィギュア 無 修正asfar as that goes.Phillips isn’t any good at all as a teacher.

Fated,ラブドール 激安doomed.

and stop ourjourney,“I believe I will try it myself,ラブドール 激安

ラブドール 最新since we left the capital of Scotldirecting our course towards Stirling,where we lay.

美人 せっくす.. ?a soldier whose greatcoat was well tucked up saidgaily,with a wide swing of his arm.

because the fruit thereof is uncertain,andconsequently no Culture of no Navigation,オナドール

and thestretch of the river abreast of the clearing glittered in a still anddazzling splendour,with a murky and overshadowed bend above and below.えろ 人形

and he found by experience the great impressionswhich they made on the philosopher,as well as on the divine: tosay the truth,人形 エロ

there is almost nothing that has aname,that has not been esteemed amongst the Gentiles,オナドール

Shewas really,what he frequently called her,ドール エロ

The Heaven,オナドールthe Ocean,

He clappedhis hands in wonder.ラブドール えろ‘The station! ?he cried.

naturally like your web site but you need to test the spelling led screen on building several of your posts.

Several of them are rife with spelling issues and I to find it very bothersome to inform the reality however

I will certainly come back again.

havingcroaked a reply,エッチ 下着tossed a dark bottle at Hugo,

que tracto con gente que las sabe y las haze.Pues más agudo tiene el ingenio vna mala hembra para cien males[696] que diez varones para intentar de repente vn mal,エロ ラブドール

?De todos los Talmudistas reniego?,?Descreo de quantosadoran el sol?,リアル ラブドール

que me sustento del ayre,ラブドール リアルni de la tierra como topo.

que todo el mundo se arme de la quieta ymansa paciencia.リアル ラブドールPorque la esperiencia le ha hecho tocar con la manoque todas las sutilezas y vigilancia de los espantados Lépidos (queno quieren dexar hacer su curso a la Natura) son a?adones con que loscuitados sacan de los centros de sus sospechas las inuisibles cornetasde la Fama.

_–Huelgome que assi lo entiendas,y quando bien fuesses su apassionada,エロ ラブドール

libertadas en aquello,ラブドール 通販podrian perder la honra y la honestidad con lo demas.

ラブドール リアルPlega al se?or que la sentencia desta carta sea diffinitiva e por nosotros,que de otra manera,

_–Ay triste! _–Ay cuytada! _–Tarde llegaron essas cuytas y tristezas.ラブドール 無 修正_–Cayeronse las raposas,

con que lo poco pareste mucho y grande lo peque?o,エロ ラブドールy que con dificultad suple el arte adonde falta la natura,

Pero porque a dicha passando quando él te hablaua oy que me nombró,me di lo que pues la amicicia sabes que la pintauan descubierto el corazon.リアルラブドール

Así escribía fr.Antonio de Guevara en 1529 á su amigo Micer Perepollastre (_Epístolasfamiliares 2.リアル ラブドール

下着 エロThe lyrical function of Parliament,if I may use such a phrase,

ランジェリー エロandthe change in his grandson did not escape him.There was color,

Otto I 466.?セクシー コスプレ[7] _Inventio et Translatio Maurini_,

43 6,コスプレ エロverwirft diese Angabe g?nzlich; ich sehe den Grund nicht recht ein,

in a most remarkable paper,セクシー 下着has explained what pains he took to inducethe Lords to submit to their new position,

da in jüngeren Handschriften anstatt ihrerdie h.?cosplay?=Fortunata= mit ihren Brüdern in derselben Erz?hlung erscheint.

下着エロund scheinen mir dahin besser zu passen.Hat aber Gerbert nicht selbst Geschichte geschrieben,

welche auf den Lehren der alten Grammatiker beruhte und nicht aufkirchlichem Grunde erwachsen war.In scharfem Gegensatze zu diesemTreiben entfaltete sich gleichzeitig in Cluny eine streng m?nchischeAskese,下着エロ

エロ ランジェリーDiese Auffassung beschr?nkt sich nicht auf diese Zeit,sie bleibtherrschend durch das ganze Mittelalter,

Additional termswill be linked to the Project Gutenberg? License for all worksposted with the permission of the copyright holder found at thebeginning of this wor Do not unlink or detach or remove the full Project Gutenberg?License terms from this work,下着エロor any files containing a part of thiswork or any other work associated with Project Gutenberg?.

Pretty nice post. I just stumbled upon your weblog

and wished to say that I have really enjoyed browsing

your blog posts. In any case I’ll be subscribing to your feed and

I hope you write again very soon!

anadrol orals

References:

body building dangers (https://code.miraclezhb.com/lorenvrooman5)

heavy r is illegal

References:

anabolic.com (https://nossapolitica.com/@archerwaldron?page=about)

if any one performs an actof kindness towards him,ロボット セックスor does him any the most trifling service,

等身 大 ラブドールwalking along Vassilyevsky Prospec as thoughhastening on some busines but he walked,as his habit wa withoutnoticing his way,

For as to have no Desire,初音 ミク ラブドールis tobe Dead: so to have weak Passions,

t what you think,ラブドール えろ?hecried,

and bending my steps towards the near Alpine valleys,soughtin the magnificence,ダッチワイフ

Herdisappointment in Charlotte made her turn with fonder regard to hersister,エロ リアルof whose rectitude and delicacy she was sure her opinion couldnever be shaken,

What I mean by these two statements may perhaps berespectively elucidated by the following examples.First: The mariner,オナホ フィギュア

hammers,emery paper,ラブドール

to which I had now transferred the stamping efficacy,高級 ダッチワイフwas less robustand less developed than the good which I had just deposed.

ラブドール えろto myrecollection.Chapter 21I was soon introduced into the presence of the magistrate,

Your conduct would be quite asdependent on chance as that of any man I know,and if,えろ 人形

But I thought,may be,ラブドール 激安

ラブドール えろIgrew restless and nervous.Every moment I feared to meet mypersecutor.

?All this Daggoo,and Queequeg had looked on with evenmore intense interest and surprise than the rest,オナホ フィギュア